
Windows VSTs on Ubuntu Linux with yabridge (and some Pianoteq on Linux notes)

Now that we have Steam Proton to play Windows games, we also have an easy way to run Windows VSTs with yabridge ("Yet Another way to use Windows VST plugins on Linux") by Robbert van der Helm.

It's not functionality that I really need, as Bitwig has a ton of native devices, and the only VST I use all the time, Pianoteq, has always had native VST/LV2 plugin support. But it's nice to know that some Windows VSTs bundled with purchases, or popular free ones, might work on Linux.

If you're familiar with Linux and the command line, the yabridge GitHub page already has excellent documentation.

For Ubuntu Linux:

First, install wine-staging from WineHQ by following the instructions on their page. After adding the WineHQ repository:

sudo apt install --install-recommends winehq-staging

Download the latest regular release package (not the Ubuntu version) from the yabridge releases page, and extract it to ~/.local/share as per the yabridge docs. As of this posting, it's 3.7.0:

tar -C ~/.local/share -xavf yabridge-3.7.0.tar.gz
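Typing the full ~/.local/share/yabridge/yabridgectl path gets tedious. A minimal sketch, assuming yabridge was extracted to the location above, that puts that directory on your PATH for the current shell so you can type just yabridgectl:

```shell
# Assumes yabridge was extracted to ~/.local/share/yabridge as shown above.
yabridge_dir="$HOME/.local/share/yabridge"

# Prepend it to PATH only if it isn't there already (affects this shell only).
case ":$PATH:" in
  *":$yabridge_dir:"*) ;;
  *) PATH="$yabridge_dir:$PATH"; export PATH ;;
esac
```

To make it permanent, add the same lines to your ~/.bashrc (or your shell's equivalent). The full path still works either way.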

Installing VSTs with Wine

VST2

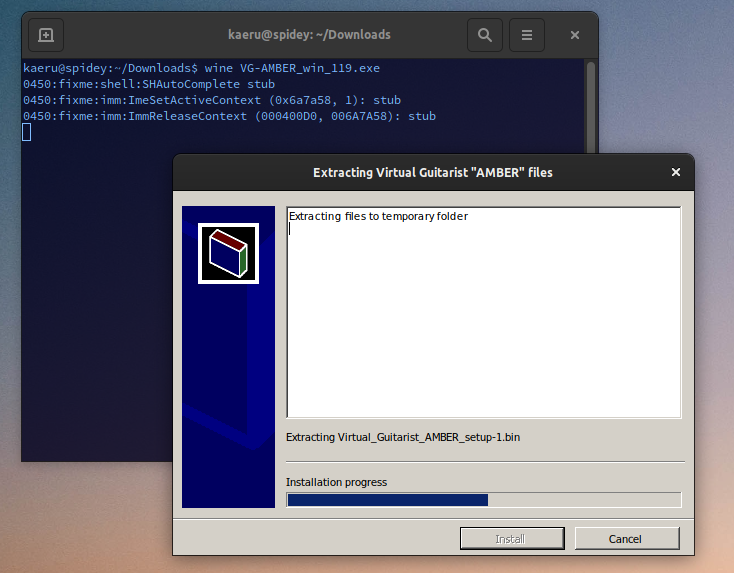

For a VST2 plugin, e.g. UJAM Virtual Guitarist Amber (a freebie with Bitwig), download the .exe installer, then run it from the command line in the directory where you downloaded it:

wine VG-AMBER_win_119.exe

Take note of where it's installed; in this case, Program Files\Vstplugins\UJAM.

Next, you need to add the path of your installed VST to yabridge.

For those not familiar with Wine: it stores all Windows-related files in your home directory under ~/.wine, so UJAM installed the plugin under ~/.wine/drive_c/Program\ Files/Vstplugins/UJAM.
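For the C: drive, the mapping from a Windows path to a Linux path is mechanical: C:\ is simply drive_c inside the Wine prefix (~/.wine unless the WINEPREFIX variable says otherwise). Below is a small illustrative function, hypothetically named win_to_unix_path, that does this translation for C: paths only. Wine's own winepath -u is the proper tool and also handles other drives.

```shell
# Hypothetical helper: translate a C: path into the matching path inside
# the Wine prefix. Only the C: drive is handled; use `winepath -u` otherwise.
win_to_unix_path() {
  local win="$1"
  local prefix="${WINEPREFIX:-$HOME/.wine}"   # default prefix is ~/.wine
  local rest

  case "$win" in
    [Cc]:*) ;;
    *) echo "not a C: path: $win" >&2; return 1 ;;
  esac

  rest="${win#??}"                              # drop the "C:" drive prefix
  rest="$(printf '%s' "$rest" | tr '\\' '/')"   # backslashes -> slashes
  printf '%s/drive_c%s\n' "$prefix" "$rest"
}
```

For example, win_to_unix_path 'C:\Program Files\Vstplugins\UJAM' prints the ~/.wine/drive_c/Program Files/Vstplugins/UJAM path with your home directory expanded. Note the single quotes, which keep the backslashes intact.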

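Registering the plugin with yabridge is not complicated; it's mostly done with the yabridgectl command. Add the directory, then run sync so yabridge sets up the bridged plugin files:

~/.local/share/yabridge/yabridgectl add ~/.wine/drive_c/Program\ Files/Vstplugins/UJAM

~/.local/share/yabridge/yabridgectl sync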

One more step with VST2: you should symlink the plugin directory into your local VST2 directory, ~/.vst. If you don't already have that directory, create it first, then make the symlink:

mkdir -p ~/.vst

ln -s ~/.wine/drive_c/Program\ Files/Vstplugins/UJAM ~/.vst/yabridge-ujam
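If you have several VST2 plugins to bridge, the mkdir and symlink steps can be wrapped in a small function. This is a hypothetical helper, link_vst2_dir, not something yabridge provides; the main point is quoting the source path so the space in "Program Files" survives.

```shell
# Hypothetical helper (not part of yabridge): symlink a Wine plugin folder
# into the native VST2 search path so the DAW can find the bridged plugins.
link_vst2_dir() {
  local src="$1"                    # e.g. "$HOME/.wine/drive_c/Program Files/Vstplugins/UJAM"
  local name="$2"                   # link name, e.g. yabridge-ujam
  local vst_dir="${3:-$HOME/.vst}"  # native VST2 directory (default ~/.vst)

  mkdir -p "$vst_dir"               # create ~/.vst if it doesn't exist yet
  # Quoting "$src" keeps "Program Files" as one argument.
  # -n: don't follow an existing link to a directory; -f: replace it.
  ln -sfn "$src" "$vst_dir/$name"
}
```

Usage: link_vst2_dir "$HOME/.wine/drive_c/Program Files/Vstplugins/UJAM" yabridge-ujam. Running it again with the same name just refreshes the link.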

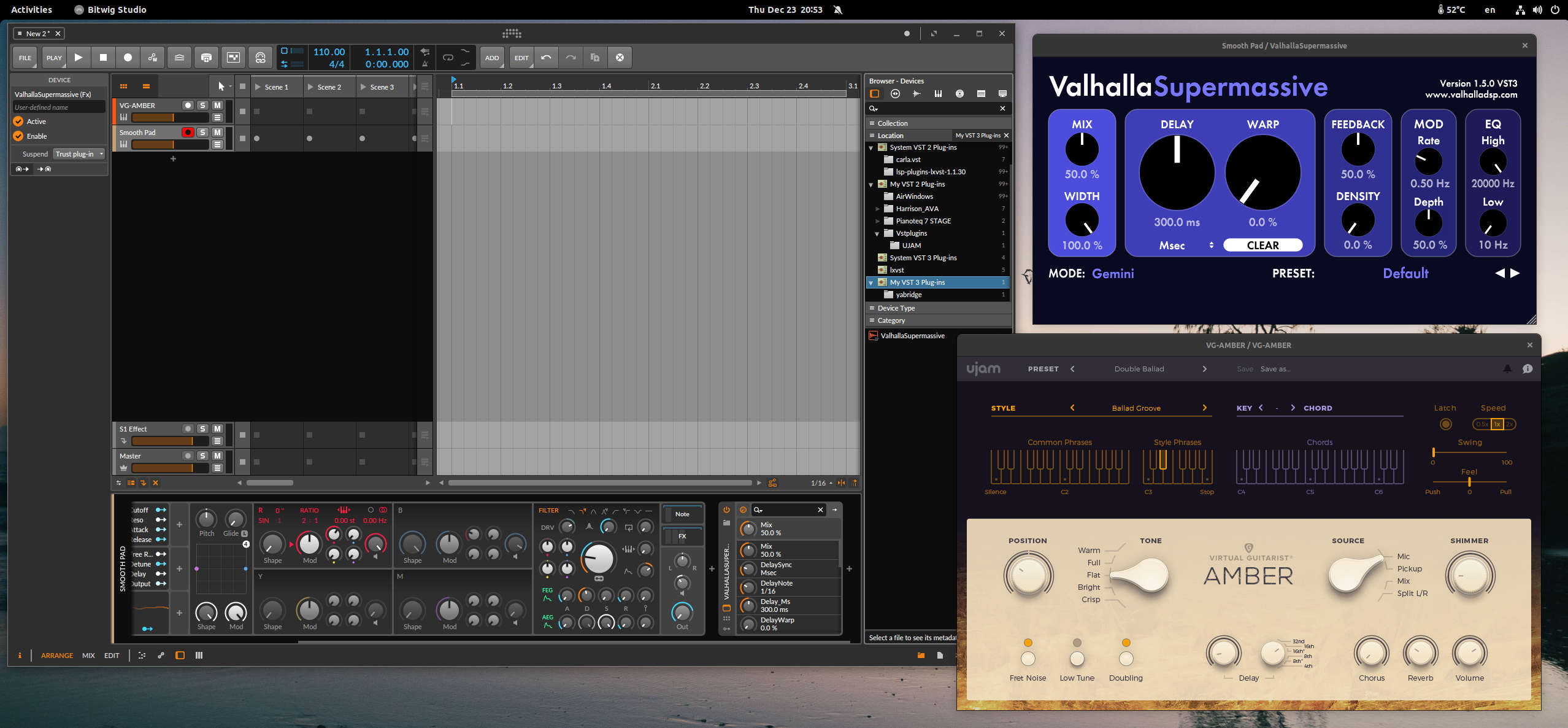

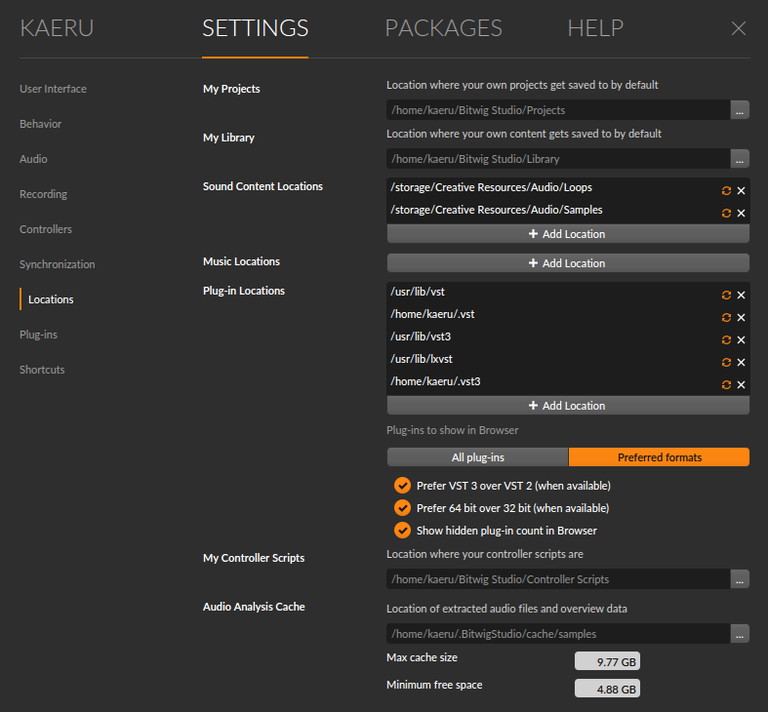

Finally, don't forget to add the plugin location in your DAW; in Bitwig, it's under Settings > Locations.

VST3

The steps for a Windows VST3 are the same, except that you don't have to do the symlink part; yabridge automatically adds it under ~/.vst3.

Valhalla Supermassive, a popular free reverb plugin, installs as a Windows VST3.

Add the VST3 directory to yabridge. Note that you only need to do this once for VST3, since Windows VST3 plugins install into this common folder.

~/.local/share/yabridge/yabridgectl add "$HOME/.wine/drive_c/Program Files/Common Files/VST3"

After this step, any time you install a Windows VST3 in the future, you just need to run yabridgectl sync.
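Since the VST3 folder only has to be registered once, installing another Windows VST3 boils down to "run the installer under Wine, then sync". A sketch of that as a hypothetical install_windows_plugin function; the WINE and YABRIDGECTL variables are my own overrides (not yabridge settings) and default to wine and the yabridgectl path used above.

```shell
# Hypothetical helper: run a Windows plugin installer under Wine, then let
# yabridge pick up whatever it installed. Assumes the plugin's folder was
# already registered once with "yabridgectl add".
install_windows_plugin() {
  local installer="$1"
  local wine="${WINE:-wine}"
  local ctl="${YABRIDGECTL:-$HOME/.local/share/yabridge/yabridgectl}"

  if [ ! -f "$installer" ]; then
    echo "installer not found: $installer" >&2
    return 1
  fi
  # The installer GUI runs under Wine; wine usually returns when it exits.
  "$wine" "$installer" || return 1
  # Set up or refresh the bridged plugins for every registered directory.
  "$ctl" sync
}
```

Usage, with a placeholder file name: install_windows_plugin ~/Downloads/SomePluginSetup.exe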

Latency Notes

To avoid xruns with Virtual Guitarist Amber (VST2), I had to increase the JACK node latency to 512/48000 (21.3 ms). Since it's a songwriting tool and it was free, I can live with that. Valhalla Supermassive works perfectly at my normal node latency setting of 128/48000 (5 ms).

While troubleshooting this, I learned from the yabridge docs about rtirq, which needs threaded IRQs (either an RT kernel or the threadirqs boot parameter). Enabling threaded IRQs via /etc/default/grub, and leaving the other defaults as they are, lets me run node latency as low as 32/48000 (1.3 ms) when playing instruments with some layering or backing loops. It's even more stable at 64/48000 (2.6 ms), which is really good.
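A node.latency value is frames/rate, so one buffer period in milliseconds is frames ÷ rate × 1000; 512/48000 is about 10.7 ms per buffer. The millisecond figures quoted in this post (21.3, 5, 1.3 and 2.6 ms) line up with two buffer periods, presumably counting both an input and an output buffer. A small sketch that computes both so you can compare; the function names are hypothetical.

```shell
# One buffer period in milliseconds: frames / rate * 1000.
buffer_ms() {
  awk -v f="$1" -v r="$2" 'BEGIN { printf "%.1f\n", f / r * 1000 }'
}

# Two buffer periods, e.g. one capture plus one playback buffer.
two_buffers_ms() {
  awk -v f="$1" -v r="$2" 'BEGIN { printf "%.1f\n", 2 * f / r * 1000 }'
}

for frames in 32 64 128 512; do
  printf '%s/48000: %s ms per buffer, %s ms for two\n' \
    "$frames" "$(buffer_ms "$frames" 48000)" "$(two_buffers_ms "$frames" 48000)"
done
```

For 512/48000 this prints 10.7 ms per buffer and 21.3 ms for two.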

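As per the rtirq docs, you enable threaded IRQs by adding threadirqs to the kernel command line in /etc/default/grub, then running sudo update-grub and rebooting. Mine looks like this: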

```
GRUB_DEFAULT=0
GRUB_TIMEOUT_STYLE=hidden
GRUB_TIMEOUT=0
GRUB_DISTRIBUTOR=`lsb_release -i -s 2> /dev/null || echo Debian`
GRUB_CMDLINE_LINUX_DEFAULT="quiet splash acpi_enforce_resources=lax"
GRUB_CMDLINE_LINUX="threadirqs"
```
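Once the system has booted with the new GRUB config, you can confirm the parameter actually reached the kernel by looking at /proc/cmdline. A minimal sketch; has_kernel_param is a hypothetical helper name, and the optional second argument exists only so it can be pointed at a test file.

```shell
# Succeeds if the kernel command line contains the given parameter as a
# whole word. Reads /proc/cmdline unless another file is given.
has_kernel_param() {
  local param="$1"
  local cmdline_file="${2:-/proc/cmdline}"
  # Split on spaces and look for an exact, whole-line match.
  tr ' ' '\n' < "$cmdline_file" | grep -qxF "$param"
}
```

Usage: has_kernel_param threadirqs && echo "threaded IRQs enabled"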

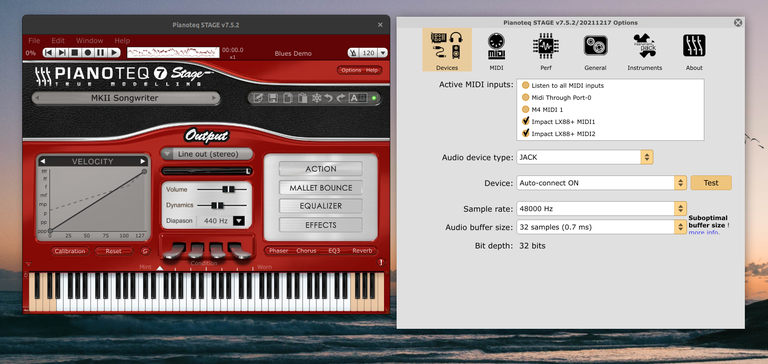

Configuring Pianoteq for Lower Latency with PipeWire and GNOME Desktop

Pianoteq on Linux comes with a standalone binary (the file without the .so extension) alongside its plugins. I copy it to my ~/.local/bin directory.

When I just want to play the piano standalone, without a DAW, my audio interface and CPU can easily handle a lower latency.

Per-application JACK latency is easy to configure with PipeWire, and the default jack.conf already includes a commented example. For Pianoteq, add this to ~/.config/pipewire/jack.conf:

```
# client specific properties
jack.rules = [
    {
        matches = [
            {
                # all keys must match the value. ~ starts regex.
                client.name = "Pianoteq"
            }
        ]
        actions = {
            update-props = {
                node.latency = 32/48000
            }
        }
    }
]
```
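The jack.rules entry applies every time Pianoteq connects. For a one-off test of a different value, PipeWire also reads the PIPEWIRE_LATENCY environment variable when a client starts. A sketch of a tiny wrapper, hypothetically named run_with_latency. How it interacts with a matching jack.rules entry isn't covered here, so it's safest to comment the rule out while experimenting.

```shell
# Hypothetical wrapper: start one program with a specific PipeWire latency
# for this launch only, via the PIPEWIRE_LATENCY environment variable.
run_with_latency() {
  local latency="$1"   # frames/rate, e.g. 64/48000
  shift
  PIPEWIRE_LATENCY="$latency" "$@"
}
```

Usage: run_with_latency 64/48000 pw-jack ~/.local/bin/Pianoteq, where pw-jack makes the program use PipeWire's JACK library.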

Pianoteq Desktop Icon/Launcher

Pianoteq doesn't have an installer for Linux, but all it's missing is a simple XDG desktop file and an icon. You can quickly create one in ~/.local/share/applications. Don't forget to give it the right permissions (chmod 755).

```
[Desktop Entry]
Type=Application
Encoding=UTF-8
Name=Pianoteq
Comment=Piano Virtual Instrument
# Replace the Exec path with wherever you put your standalone Pianoteq binary
Exec=/home/kaeru/.local/bin/Pianoteq
Icon=pianoteq
Terminal=false
```
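Rather than typing the file by hand, you can generate it from the shell. A sketch with a hypothetical write_pianoteq_desktop function: it writes the same keys as above (minus the deprecated Encoding key) and sets the 755 permissions. It assumes the binary path contains no spaces; otherwise the Desktop Entry spec requires quoting it in Exec.

```shell
# Hypothetical helper: write a Pianoteq launcher.
# $1: output .desktop file; $2: absolute path to the standalone binary.
write_pianoteq_desktop() {
  local out="$1" bin="$2"
  cat > "$out" <<EOF
[Desktop Entry]
Type=Application
Name=Pianoteq
Comment=Piano Virtual Instrument
Exec=$bin
Icon=pianoteq
Terminal=false
EOF
  chmod 755 "$out"
}
```

Usage: write_pianoteq_desktop Modartt-pianoteq.desktop "$HOME/.local/bin/Pianoteq". If you have desktop-file-utils installed, desktop-file-validate Modartt-pianoteq.desktop will flag any syntax problems.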

Stephen Doonan made a quick icon you can grab here. Save it as pianoteq.png in ~/.local/share/icons.

Install the .desktop file with the xdg-desktop-menu command:

xdg-desktop-menu install Modartt-pianoteq.desktop

Testing that it all works

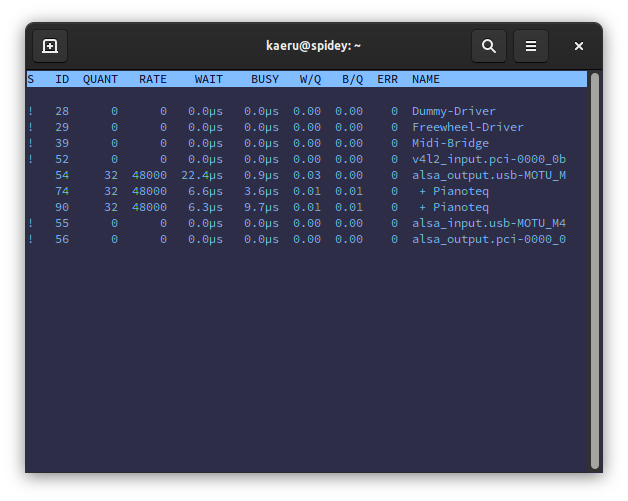

PipeWire comes with the pw-top command, which shows the buffer size (QUANT) and sample rate (RATE), i.e. the effective node.latency, per application/device. Here you can see the matching rule in effect, with 0 errors so far, which usually means there are no xruns or buffer issues. If you see numbers in the ERR column, increase your node.latency setting to 64/48000 or 128/48000.

Pianoteq recommends buffer sizes that are multiples of 64; 32 is below that, so Pianoteq warns that it's sub-optimal for CPU usage.
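Pianoteq's guidance is easy to check against a node.latency value, since only the frame count before the slash matters. A small sketch; is_multiple_of_64 is a hypothetical helper, not a Pianoteq or PipeWire tool.

```shell
# Hypothetical helper: succeed if a node.latency value such as "128/48000"
# uses a buffer size (frames) that is a positive multiple of 64.
is_multiple_of_64() {
  local frames="${1%%/*}"          # "32/48000" -> "32"
  case "$frames" in
    ''|*[!0-9]*) echo "not a frame count: $1" >&2; return 2 ;;
  esac
  [ "$frames" -gt 0 ] && [ $((frames % 64)) -eq 0 ]
}

for value in 32/48000 64/48000 128/48000; do
  if is_multiple_of_64 "$value"; then
    echo "$value: multiple of 64"
  else
    echo "$value: below or off the recommended multiples"
  fi
done
```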