
Local AI with AMD Radeon 9070 XT on Ubuntu Linux 25.04 with ROCm 6.4.1

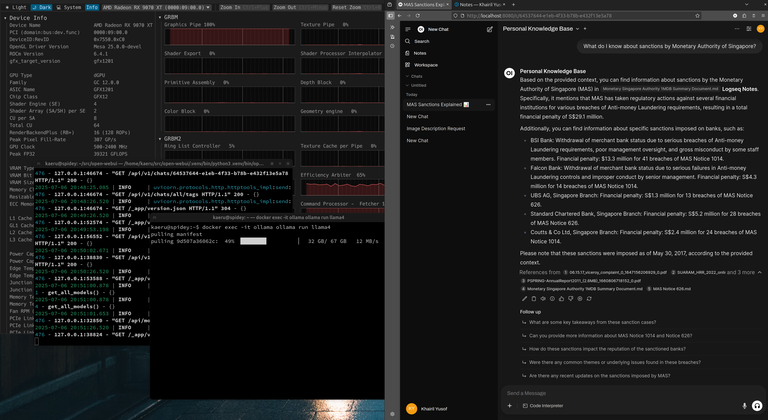

Previously, I shared that I wasn't expecting AI stuff to work without issues on the RX 9070 XT with ROCm 6.4.1 on an unsupported Ubuntu version and kernel 6.14.

Well, everything I've needed for local AI, across the popular servers and tools, seems to work just fine so far.

The Ollama Docker image just works.

docker run -d --device /dev/kfd --device /dev/dri -v ollama:/root/.ollama -p 11434:11434 --name ollama ollama/ollama:rocm
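Once the container is up, a quick way to confirm it answers is to call Ollama's HTTP API on the published port. This is a minimal sketch, not part of my original setup notes: the model name `llama3.2` is just an assumption, and whichever model you use has to be pulled first (for example with `docker exec ollama ollama pull <model>`).

```python
import json
import urllib.request

# Port 11434 matches the -p mapping in the docker run command above.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(prompt, model="llama3.2"):
    """Build a non-streaming request for Ollama's /api/generate endpoint.

    The default model name is an assumption; use any model you have pulled.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, model="llama3.2"):
    """Send the prompt to the local Ollama server and return the reply text."""
    request = build_generate_request(prompt, model)
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["response"]
```

Calling `generate("Say hello in five words.")` should return the model's reply as a string if the server is running and the model is available.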

llama.cpp's HIP build works without issues; just switch the GPU target to gfx1201.
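For reference, here is a rough sketch of what "switching the target" looks like. It only prints the CMake commands rather than running them. The flag names (`GGML_HIP`, `AMDGPU_TARGETS`) are my recollection of llama.cpp's HIP build instructions and are an assumption here; they have changed between releases, so check the build docs for the version you have checked out.

```python
import shlex


def hip_build_commands(gpu_target="gfx1201", build_dir="build"):
    """Return the configure and build commands for a HIP build of llama.cpp.

    Flag names are assumptions based on llama.cpp's build docs and may
    differ between releases (newer trees may use a different targets flag).
    """
    configure = [
        "cmake", "-S", ".", "-B", build_dir,
        "-DGGML_HIP=ON",                    # enable the HIP/ROCm backend
        f"-DAMDGPU_TARGETS={gpu_target}",   # gfx1201 is the RX 9070 XT target
        "-DCMAKE_BUILD_TYPE=Release",
    ]
    build = ["cmake", "--build", build_dir, "--config", "Release"]
    return [configure, build]


# Print the commands so they can be reviewed and pasted into a shell.
for command in hip_build_commands():
    print(shlex.join(command))
```

Run the printed commands from the root of a llama.cpp checkout with ROCm installed.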

vLLM for ROCm also builds and runs fine.

The various tools that depend on PyTorch, like Docling, work too.
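A quick sanity check for anything PyTorch-based is to ask PyTorch what it can see. This is a small sketch, assuming a ROCm build of PyTorch is installed; ROCm builds expose the GPU through the usual `torch.cuda` API, and `torch.version.hip` is set instead of being `None`.

```python
def describe_gpu():
    """Return a one-line summary of the GPU PyTorch can see, if any."""
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed"

    # ROCm builds of PyTorch report the GPU through the torch.cuda namespace.
    if not torch.cuda.is_available():
        return "PyTorch is installed but sees no GPU"

    props = torch.cuda.get_device_properties(0)
    hip_version = getattr(torch.version, "hip", None)
    backend = f"ROCm/HIP {hip_version}" if hip_version else "CUDA"
    vram_gib = props.total_memory / 2**30
    return f"{props.name}: {vram_gib:.1f} GiB via {backend}"


print(describe_gpu())
```

If this reports the card and a ROCm/HIP backend, tools built on PyTorch should be able to use the GPU as well.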

16GB of VRAM is OK, but I've quickly run into limits when loading larger models without any form of optimisation or quantisation.
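Some back-of-the-envelope arithmetic shows why. The sketch below estimates only the memory needed for the weights (parameters × bits per weight ÷ 8) and ignores the KV cache, activations, and runtime overhead, so real usage is higher. The model sizes are illustrative examples, not the specific models I tried.

```python
def weight_gib(params_billion, bits_per_weight):
    """Approximate memory for model weights alone, in GiB."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 2**30


VRAM_GIB = 16  # nominal VRAM on the RX 9070 XT

# Illustrative model sizes (billions of parameters) and precisions.
for params, bits in [(8, 16), (8, 4), (14, 16), (14, 4)]:
    size = weight_gib(params, bits)
    verdict = "fits" if size < VRAM_GIB else "does not fit"
    print(f"{params}B at {bits}-bit: {size:.1f} GiB of weights, {verdict} in {VRAM_GIB} GiB")
```

An 8B model at 16-bit already takes roughly 15 GiB for weights alone, which leaves almost nothing for context, while the same model at 4-bit needs under 4 GiB.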

I think the upcoming R9700 with 32GB might be better for compute and AI tasks, but whether it will be affordable remains to be seen. If AMD and its partners can sell a widely available 32GB card at a price point between gaming and enterprise cards, they might have a winner.

For now I'm quite happy with the current state of AMD's open source stack for compute and AI on Linux with consumer GPUs. I already have some quick wins, such as being able to use AI on my local documents and knowledge base.

And it looks like I've got a good working base on which to build more specific and custom uses, both for my work around investigative journalism and for day-to-day productivity. I'll share more on those use cases now that I've got a working setup for R&D.