Processing Scanned Documents with AI

Even in 2022, we're still getting badly scanned Malaysian government documents, especially important documents submitted as evidence to parliamentary enquiries or audits.

From the Parliamentary Public Accounts Committee we can get very detailed reports running into hundreds of pages, often marked as official secrets but now made public. Previously we would clean them up and then run OCR, so that as much of the text as possible is at least searchable and the reports become much more accessible.

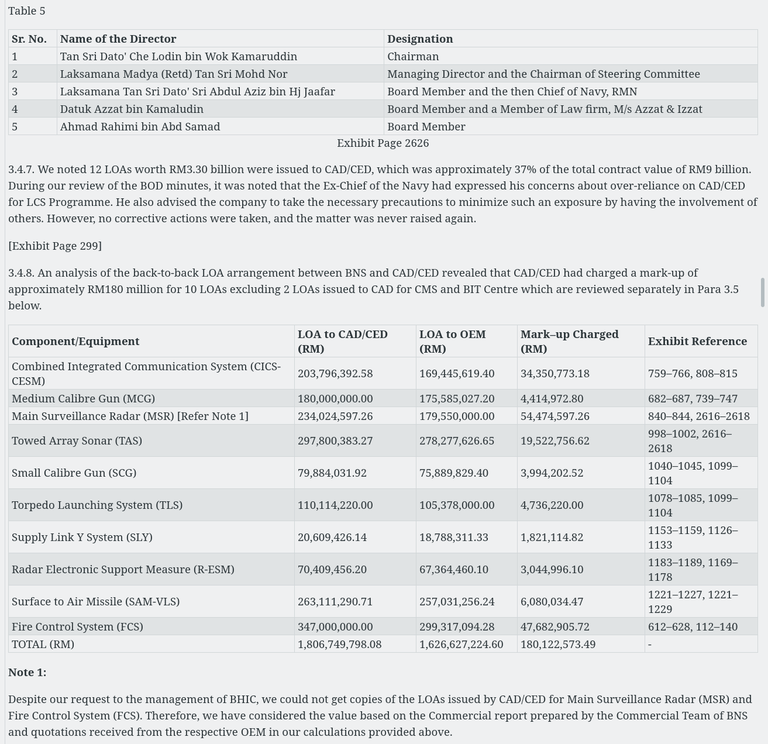

With current AI visual language models (VLM) we can take it one step further and extract structured content and images, including tables, and even describe the information conveyed in charts. Unfortunately, despite the hype, it's not yet at the point where you can just dump in a bunch of documents and have it all automagically work.

There are still a few problems, as not all models work well with all formats:

- Many models randomly drop large amounts of text or treat it as images. The image descriptions can then fail or make little sense, because the "image" is just partial bits of text and not a chart or table.

- Numbered outlines get structured as tables.

- Repeated token loops cause failures or very long processing times.

Currently the model that works best for badly scanned documents, signboards and similar material in the Malaysian context is Qwen2.5VL, especially the 7B parameter model. Unfortunately it has a major issue of getting into very long repeating loops. Hopefully there will be a Qwen3 VL model soon that might fix this.

For now, one solution is to use good old Unix shell utilities and commands.

Step 1: We use Scantailor to clean up the document as much as possible: clipping headers and footers, improving text clarity, deskewing and more. As part of the process you will also split the document into separate pages. See my guide to Cleaning Scanned Documents.

Step 2: We batch convert the cleaned-up image files (by default in the out directory) into individual PDFs.

Step 3: Run the conversion in that directory, one job per image file:

    ls *.tif | parallel convert {} {.}.pdf

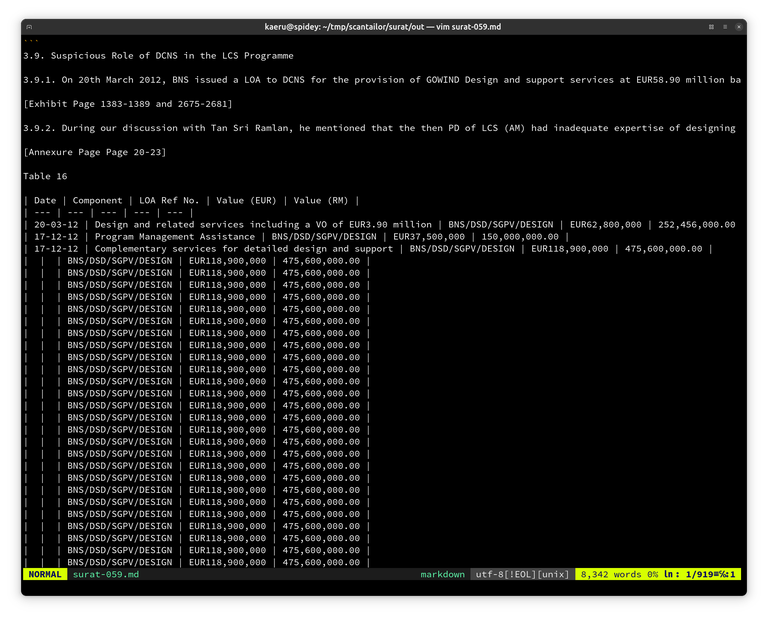
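With hundreds of pages it is easy to miss a conversion that failed quietly. The following is a minimal sketch for checking that every image got a non-empty PDF, relying on the fact that `{.}` in GNU parallel is the input name without its extension. The function name `missing_pdfs` is hypothetical and only for illustration.

```bash
# Print every image file that has no non-empty PDF next to it.
# missing_pdfs is a hypothetical helper, not part of any tool used here.
missing_pdfs() {
  local img
  for img in "$@"; do
    # ${img%.*} strips the last extension, mirroring {.} in GNU parallel
    [ -s "${img%.*}.pdf" ] || printf '%s\n' "$img"
  done
}
```

Running `missing_pdfs *.tif` in the out directory should print nothing when all pages were converted.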

Step 4: Process page by page with docling-cli to Markdown using Qwen2.5VL. I did a quick and dirty patch to docling to support Qwen2.5vl:7b with Ollama. When processing page by page, even with repeated content, there isn't enough bad content on a single page to cause a failure, compared to trying to process the entire document in one go.

    for i in *.pdf; do
        # qwen25_ollama is the model name added by the docling patch mentioned above
        docling -v --pdf-backend dlparse_v4 --pipeline vlm --vlm-model qwen25_ollama --enable-remote-services --ocr-lang en,ms --to md "$i"
    done
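Since a single page can still send the model into a very long loop, it may help to put a time limit on each page and note which pages hit it. This is a sketch only, assuming GNU coreutils `timeout` is available. `PAGE_TIMEOUT`, `FAILED_LOG` and `process_pages` are hypothetical names, the 600 second default is an arbitrary assumption, and the docling flags are copied unchanged from the loop above.

```bash
# Hypothetical settings; neither is a docling option.
PAGE_TIMEOUT="${PAGE_TIMEOUT:-600}"            # seconds allowed per page (assumed value)
FAILED_LOG="${FAILED_LOG:-failed_pages.txt}"   # where failed pages are recorded

# Convert each PDF given as an argument, one page file at a time.
process_pages() {
  local pdf status
  for pdf in "$@"; do
    status=0
    timeout "$PAGE_TIMEOUT" docling -v --pdf-backend dlparse_v4 --pipeline vlm \
      --vlm-model qwen25_ollama --enable-remote-services --ocr-lang en,ms \
      --to md "$pdf" || status=$?
    if [ "$status" -ne 0 ]; then
      # GNU timeout exits with 124 when the time limit was reached
      printf '%s\t%s\n' "$pdf" "$status" >> "$FAILED_LOG"
    fi
  done
}
```

After `process_pages *.pdf`, the pages listed in the log can be retried or transcribed by hand, while the rest of the document is already done.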

Step 5: Merge the individual Markdown files into one, with two line breaks between pages.

    for f in *.md; do
        [ "$f" = merged.md ] && continue  # skip the output file itself
        cat "$f"; printf '\n\n'
    done > merged.md
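One thing to watch with the merge is page order: the shell lists files alphabetically, so if the page numbers in the file names are not zero-padded, page 10 lands before page 2. Below is a minimal sketch that merges in natural order instead, assuming a `sort` that supports `-V` (as GNU sort does) and file names without newlines. `merge_pages` is a hypothetical helper name.

```bash
# Merge all *.md files in the current directory into the file named by $1,
# in natural (version) order, with two line breaks after each page.
merge_pages() {
  local out="$1" tmp="$1.tmp" f
  printf '%s\n' *.md | sort -V | while IFS= read -r f; do
    [ "$f" = "$out" ] && continue   # skip output left over from an earlier run
    cat "$f"
    printf '\n\n'
  done > "$tmp"
  # Write to a temporary name first so the output never matches *.md mid-run
  mv "$tmp" "$out"
}
```

Usage is `merge_pages merged.md` from the directory holding the per-page Markdown files.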

Finally we have structured Markdown, ready to be used with other document processing tools, such as entity extraction, analysis by LLM, or RAG.
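Because Qwen2.5VL can still loop on an individual page, it may be worth scanning the per-page output before trusting the merged file. The sketch below assumes a loop shows up as the same non-blank line repeated many times in a row, which will not catch every case (for example, repetition inside one very long line). `max_repeat` and `flag_looping_pages` are hypothetical helper names, and the threshold is something to tune by hand.

```bash
# Print the length of the longest run of identical consecutive
# non-blank lines in a file (0 if the file has no non-blank lines).
max_repeat() {
  grep -v '^[[:space:]]*$' "$1" | uniq -c |
    awk '$1 > max { max = $1 } END { print max + 0 }'
}

# Print each file whose longest run reaches the threshold given as $1.
flag_looping_pages() {
  local threshold="$1" f
  shift
  for f in "$@"; do
    if [ "$(max_repeat "$f")" -ge "$threshold" ]; then
      printf '%s\n' "$f"
    fi
  done
}
```

For example, `flag_looping_pages 10 *.md` lists pages where some line repeats ten or more times in a row. Legitimate repetition, such as identical table rows, can trigger it too, so treat the result as a list of pages to look at rather than a verdict.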

- Test Documents and Pages for testing

- Example complete processed document "Surat-kepada-Sekretariat-PAC-&-Laporan-Audit-Forensik-LCS-Programme" in markdown format.
