Cleaning up Documents with LLMs

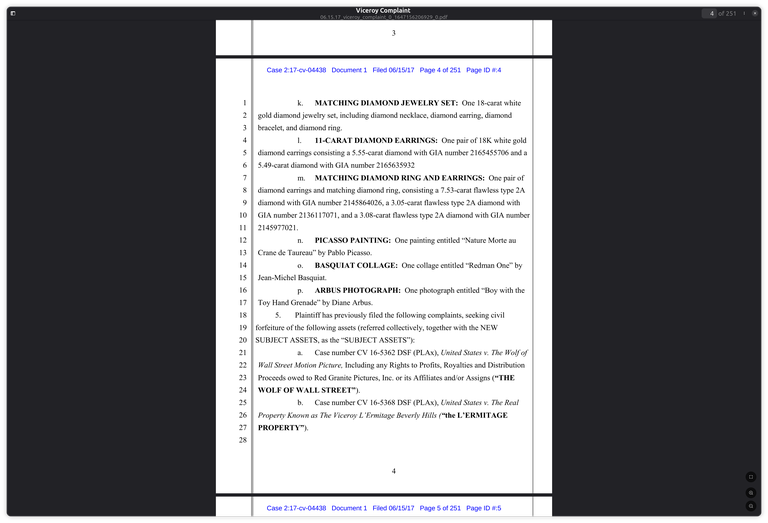

Documents often have standard formatting elements that don't convert neatly into structured text. When you use tools like docling to convert documents into structured text, the default output can contain strangely formatted text or unwanted elements. US legal documents, for example, have line numbers repeated on every page. This results in Markdown files with numbers like this:

1 2 3 4 5 6 7 8 9 10 11 ...

These sequences repeat throughout the document.

There are multiple ways to deal with something like this.

- Craft a custom pipeline to identify and drop the region containing the numbers (if a VLM can detect the region boundaries)

- Use an LLM with a custom prompt to reformat and clean up the document

- Use old-fashioned regex search and replace to quickly fix repeated formatting patterns

Writing a custom pipeline can be a time-consuming one-time investment, but it's worth it if you're going to deal with a lot of US legal documents. That's something I don't need right now.

LLM prompts can be quite effective.

You can even use an LLM to generate the prompt by describing your problem, and it'll come up with something like this:

You are an expert document parser and editor. Your task is to clean and format a US legal document that has been converted to Markdown, resulting in duplicated, extraneous, and incorrectly placed content, specifically line numbers.

Please perform the following steps to clean the text:

1. Remove all extraneous content, specifically duplicated line numbers. These often appear at the beginning of lines or scattered throughout the text.

2. Consolidate any fragmented text that was separated by the removed line numbers to restore the original flow of paragraphs and clauses.

3. Preserve all paragraph numbers, e.g. 1. 2. 3. 4.

4. Preserve all existing Markdown formatting (e.g., paragraph numbers, headings, bolding, lists, and links) used to structure the document.

5. Crucially, preserve the exact legal text; do not paraphrase, summarize, or alter the substance of the document in any way.

6. Output the final, cleaned text in standard Markdown format.

This can actually work quite well for small amounts of text, a few pages long, and you can fine-tune the prompt or fix additional errors until the output looks right. However, it doesn't work well for very large documents, such as legal filings, which can be 200 pages long. That makes it a very compute-intensive task that can take a long time with no guarantee of success. LLMs are also prone to randomly dropping text or introducing errors, which would be very hard to spot by eye in a 200-page document. And an error-prone court document is pretty much useless to me.
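If you do go the LLM route, one way to catch dropped or altered text without eyeballing 200 pages is to diff the words of the input against the words of the output. The sketch below is an illustration rather than part of the workflow described here: it assumes Python's standard `difflib`, and the helper name `word_changes` is hypothetical. It ignores digit-only tokens on both sides (those are the line numbers you asked the LLM to remove), so a genuinely dropped number in the legal text would slip through.

```python
import difflib


def word_changes(original: str, cleaned: str) -> list[str]:
    """Return words removed ('- word') or added ('+ word') by the clean-up.

    Hypothetical helper: tokens made only of digits are ignored on both
    sides, because removing bare line numbers is the point of the clean-up.
    """
    def words(text: str) -> list[str]:
        # Split on any whitespace so re-flowed paragraphs still line up.
        return [w for w in text.split() if not w.isdigit()]

    changes = []
    for line in difflib.ndiff(words(original), words(cleaned)):
        # ndiff marks unchanged words with "  " and hint lines with "? ";
        # keep only the words that were dropped or introduced.
        if line.startswith(("- ", "+ ")):
            changes.append(line)
    return changes
```

An empty list means every non-numeric word survived, in order; anything else points you at the exact words to check instead of the whole document.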

The third option can be the best one, especially if there are repeated patterns like the ones I was facing. Here it can be better to use a coding LLM to help generate the regex you need for one-off clean-up tasks.

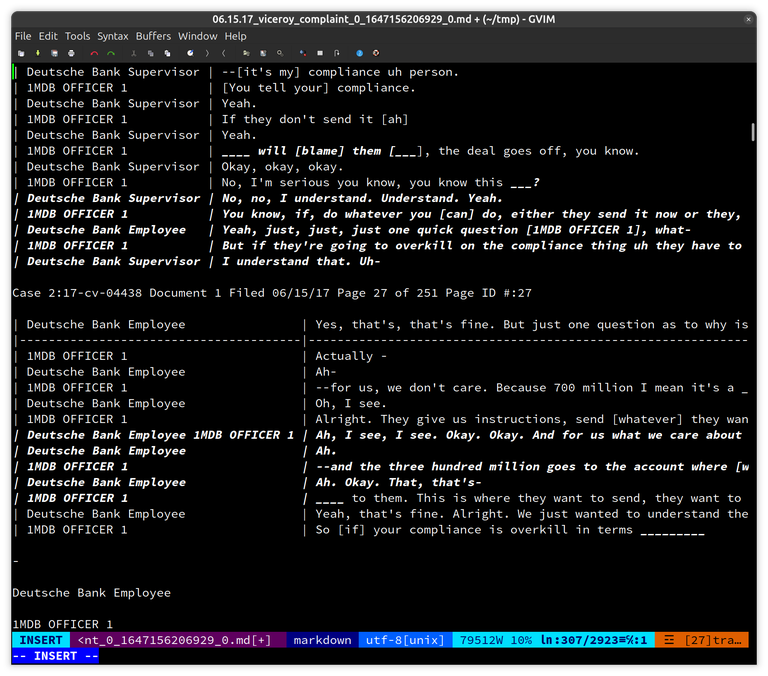

For the following text and numbers, give me an example of a vim search and replace command to find numbers and remove the line and following blank line from a text document ...

`:g/^\d\+$/d`

This deletes every line that consists only of digits, and it takes milliseconds to run across all 200 pages of text.
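The vim command deletes only the digit-only lines; the blank line after each number stays. If you would rather script the clean-up, or also drop that following blank line as the prompt asked, a Python version might look like the sketch below. It assumes the line numbers sit on their own lines, as the vim pattern does, and the name `strip_line_numbers` is made up for illustration.

```python
import re

# A line holding only a number (optionally padded with spaces or tabs),
# plus at most one immediately following blank line. MULTILINE makes
# ^ and $ match at every line boundary, like vim's per-line matching.
LINE_NUMBER = re.compile(r"^[ \t]*\d+[ \t]*$\n?(?:^[ \t]*$\n?)?", re.MULTILINE)


def strip_line_numbers(text: str) -> str:
    """Hypothetical helper: remove digit-only lines and one trailing blank line each."""
    return LINE_NUMBER.sub("", text)
```

Lines such as "Section 12 applies." or paragraph numbers like "1." are left alone, because the pattern requires the whole line to be digits.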

Using AI to help you apply tried-and-tested methods like regex and scripts to text and data can do a lot more for productivity. For example, instead of brute-forcing it by asking an LLM to extract every address in a large document or dataset, you can simply ask it to suggest a regex that matches that pattern, plus a script in whatever language you're familiar with: Python, Perl, a spreadsheet formula and so on.
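As an illustration of the address case, here is the kind of regex and script an LLM might suggest. The pattern is a hypothetical, deliberately simplified one for US-style street addresses (a house number, one to three capitalized words, then a common street suffix); real addresses vary far more, so treat it as a starting point to test against your own data.

```python
import re

# Hypothetical, simplified US street-address pattern:
#   1-5 digit house number, 1-3 capitalized words, a common street suffix.
ADDRESS = re.compile(
    r"\b\d{1,5}\s+(?:[A-Z][a-z]+\s+){1,3}"
    r"(?:Street|St|Avenue|Ave|Road|Rd|Boulevard|Blvd|Drive|Dr|Lane|Ln)\b"
)


def find_addresses(text: str) -> list[str]:
    # No capturing groups in the pattern, so findall returns whole matches.
    return ADDRESS.findall(text)


print(find_addresses("Filed at 123 Main Street, Springfield. Also see 45 Oak Ave for details."))
# ['123 Main Street', '45 Oak Ave']
```

Running a pattern like this over a 200-page file is instant and deterministic, and any misses show up as a clear pattern you can refine rather than random omissions.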

Using AI to help with custom scripting tasks like these is something I'll be exploring next.
