AI Augmented Investigative Journalism

My recent personal workstation upgrades and ROCm explorations were mostly driven by the need to explore and keep up to date with AI developments: to understand what works now, what doesn't, and what to prepare for that will likely work in the future for investigative journalism and government transparency.

A key requirement for me is that it has to be built on an open source stack and be able to run on commodity hardware. I'm happy to see that a lot of the work on local AI follows these principles. It took me a bit of time to figure out which parts were relevant to me.

- LLM model server. Ollama works best, it's widely supported, runs on any platform, supports AMD ROCm and can switch between models with a single server instance.

- UI + framework. I need an interface to interact with all the language models and extend them with tools, functions, local documents, notes etc. Open WebUI works for me and, it seems, for many others as well.

- Local documents and data. I need the models to work with documents and data locally. (I learned that the term for this is RAG, retrieval-augmented generation.)

These projects are all quite well engineered and documented, making deployment and troubleshooting relatively easy. The fact that almost all of them are Python based made it even easier, due to familiarity. Testing them also meant updating my local Python development environment and toolkit with uv, and using Docker more extensively for testing and development. This was a nice bonus. I'm not a full-time developer, so finding any time to update my development environment is a good thing.

An updated development environment was quite important, especially with ROCm, where you often have to use ROCm-specific versions of libraries like torch and deal with specific Python versions and dependencies. When you can get things to work quickly, exploration and learning become fun.

Augment not replace

My first approach was to feed the model my knowledge base and test it with queries whose answers I already have very well organised in Logseq. This didn't work too well. While extensive, as seen in the graph animation below, the notes are quite diverse and sparse. I can find the things I need quickly and directly in Logseq, and the connections often make sense in my mind, but not so for LLMs. So the initial impression was disappointing: a lot of compute power, with a lot of errors in the responses.

Knowing that my notes system is way faster got me thinking: how about using AI to speed up my note taking and organisation?

I already know what I'm looking for, and have shared various ways of piecing together data and information from a variety of seemingly disconnected sources.

So how about we speed this up instead with AI?

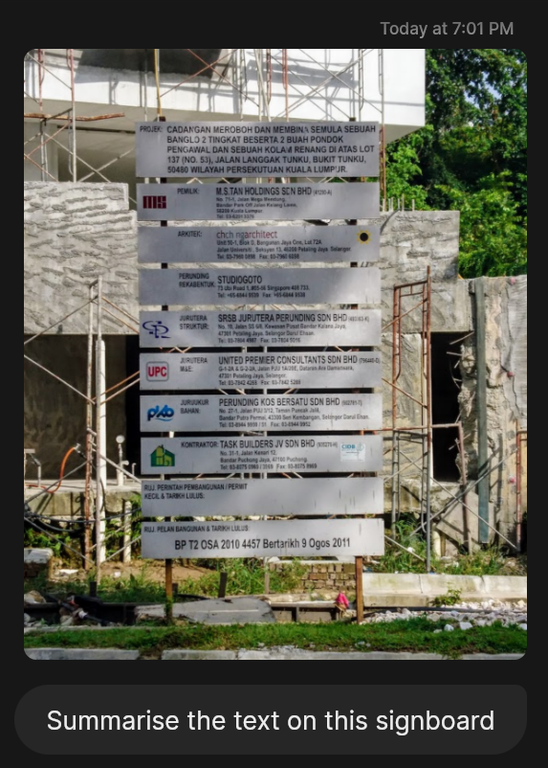

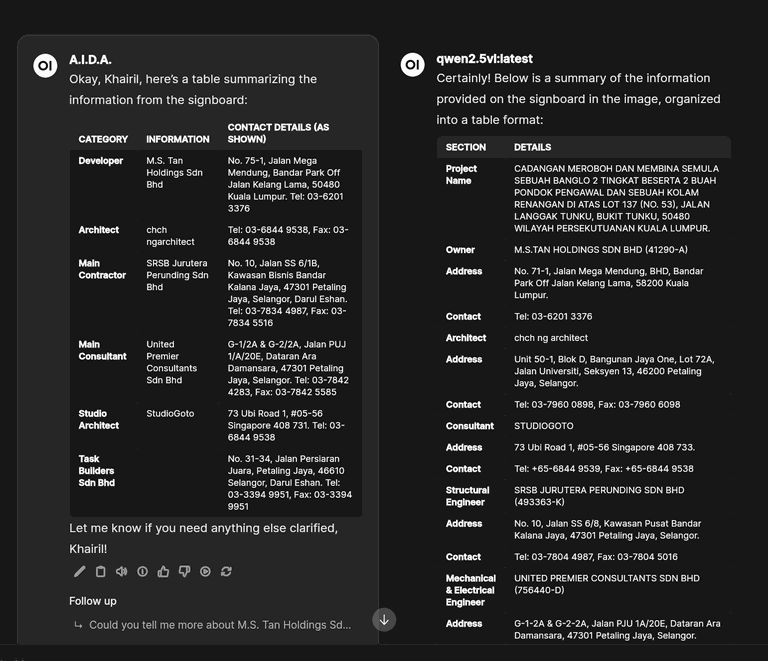

Low hanging fruit. Extract and structure content/data from unstructured sources

Getting structured data or text from images and documents is something AI tools do really well, with translation if needed. The newer vision models like Qwen2.5vl are really good at this. You can even run models side by side in Open WebUI to compare their accuracy. This speeds up a lot of ad hoc tasks for me.
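Outside the Open WebUI interface, the same kind of extraction can be scripted against Ollama's HTTP API. The following is only a minimal sketch, assuming Ollama is listening on its default local address and that the Qwen2.5vl model has already been pulled; the function names and the prompt wording are my own illustrations, not something from Ollama or Open WebUI.

```python
import base64
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"

# Hypothetical prompt, adjust to the kind of source you are working with.
DEFAULT_PROMPT = (
    "Extract all names, titles and organisations from this image "
    "as a Markdown table. Translate to English where needed."
)


def build_extract_request(image_bytes, model="qwen2.5vl", prompt=DEFAULT_PROMPT):
    """Build a POST request for Ollama's /api/generate endpoint with one image."""
    payload = {
        "model": model,
        "prompt": prompt,
        # Vision models receive images as base64-encoded strings.
        "images": [base64.b64encode(image_bytes).decode("ascii")],
        # Ask for a single JSON reply instead of a token stream.
        "stream": False,
    }
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_text(image_bytes, **kwargs):
    """Send the image to the model and return the generated text."""
    with urllib.request.urlopen(build_extract_request(image_bytes, **kwargs)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `extract_text(open("photo.jpg", "rb").read())` on a hypothetical `photo.jpg` would return the model's answer as a string, which can then be pasted into notes. Running the same image through a second model only requires changing the `model` argument.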

Adding the results to Logseq lets me quickly turn them into notes, along with any connections and comments of my own, in a way that I know allows me to search and access information fast.

Other similar tasks include things like extracting a timeline of events into a table. While an LLM can sometimes do things like sort by date, I find it's best to just do this in a spreadsheet. LLMs aren't always that great at this, and are prone to strange errors. Once information has been structured by an LLM into a format like CSV, use the right tools, like spreadsheets, to work with it.
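For anyone who prefers a script to a spreadsheet, the same idea of leaving the sorting to a deterministic tool can be sketched in a few lines of Python. This is only an illustration: the column names, the date format and the sample rows are made up, and a real LLM-produced CSV would need its own format checked first.

```python
import csv
import io
from datetime import datetime

# Made-up sample standing in for CSV text produced by an LLM.
SAMPLE_CSV = """date,event
2021-07-01,Event C
2018-11-15,Event A
2019-03-02,Event B
"""


def sort_timeline(csv_text, date_column="date", date_format="%Y-%m-%d"):
    """Parse CSV text and return its rows sorted by real dates, oldest first."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # strptime raises ValueError on a malformed date, which surfaces
    # extraction errors instead of silently mis-sorting them.
    return sorted(
        rows,
        key=lambda row: datetime.strptime(row[date_column].strip(), date_format),
    )


for row in sort_timeline(SAMPLE_CSV):
    print(row["date"], row["event"])
```

Parsing the dates properly, rather than sorting them as text, is the point here: a malformed date raises an error immediately, which is exactly the kind of strange output worth catching before it ends up in your notes.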

When an extraction does work well, you already know which model suits the task, and can then have the LLM quickly generate a batch script for you. Say you get a source to go around taking and sending you a bunch of photos of signboards, office organisation charts and whatnot.
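A batch script for a folder of photos could look roughly like the sketch below. It is an assumption-laden illustration, not a finished tool: `batch_extract` and its parameters are hypothetical names, and the `extract` argument stands in for whatever function sends one image to the model you settled on and returns its text.

```python
from pathlib import Path
from typing import Callable, List

# Assumption: these are the photo formats the source is sending.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".webp"}


def batch_extract(
    photo_dir: Path,
    out_dir: Path,
    extract: Callable[[bytes], str],
) -> List[Path]:
    """Run `extract` on every photo in photo_dir, writing one .md file each."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for photo in sorted(photo_dir.iterdir()):
        if photo.suffix.lower() not in IMAGE_SUFFIXES:
            continue  # ignore anything that is not a photo
        target = out_dir / f"{photo.stem}.md"
        if target.exists():
            continue  # already processed, so reruns only handle new photos
        target.write_text(extract(photo.read_bytes()), encoding="utf-8")
        written.append(target)
    return written
```

Keeping the model call behind the `extract` parameter means the loop can be tested with a dummy function first, and the model can be swapped later without touching the file handling. Writing one Markdown file per photo also makes it easy to review each result before adding it to notes.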


Additional external Internet sources

Extending Open WebUI with its built-in web search, tools and functions allows you to use other sources to quickly find key people, companies and relationships from public sources. You can automate summarising key parts of search results, such as those found on my Malaysian Investigative Tipsheet.

I will try to work through and create more custom search tools for investigations in Open WebUI to share. This is something I also like about Open WebUI: it's very easy to create and customise specific models and sources. I found that restricting context to related materials, with the right models, gives much more useful and accurate results.

RAG on larger sets of documents and datasets

While Open WebUI's built-in RAG works quite well for quickly getting a collection of documents imported, it didn't handle a larger set of documents too well, or even a single large document. Looking around for solutions for RAG over large document sets, I found two options: Microsoft GraphRAG and LightRAG.

LightRAG has quite a good UI for managing documents, works with Ollama, and also has Ollama API support that allows you to easily integrate it into Open WebUI as another model you can query. It also provides for editing relationships through the API or the web interface.

Docling

LightRAG can handle a few different document types, even audio, but it doesn't do OCR yet. Which is fine, and brings us to another very useful tool: Docling, from IBM, which specialises in parsing documents and preparing them as structured text/data ready for AI or other document processing tasks.

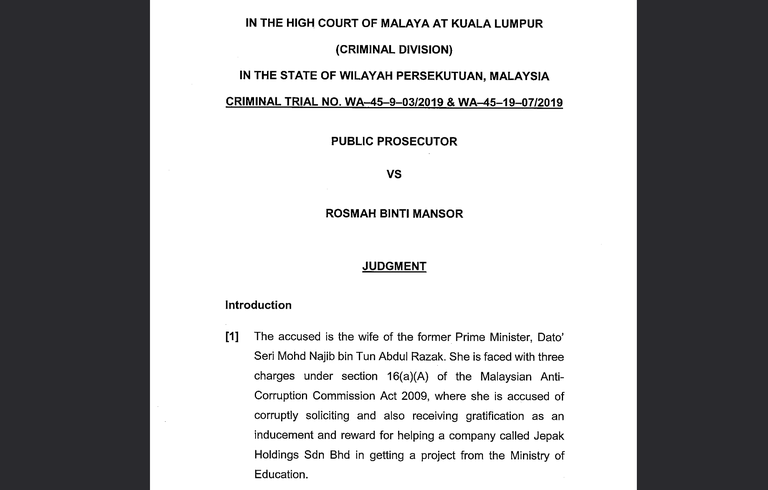

This is also where previous efforts in preparing scanned documents for conversion to text are helpful, and may still be needed. This test document, the Rosmah vs PP full court judgement, isn't too bad, but the past effort spent cleaning up other documents is what makes them ready for AI processing.

Example docling command line (this works with ROCm, by the way):

docling --pipeline vlm --device cuda --num-threads 32 --ocr-engine tesseract --ocr-lang msa,eng --image-export-mode referenced Full\ judgment\ PP\ v\ Rosmah\ Mansor.pdf

Docling will not just do OCR to recognise text; it will also output it as nicely structured Markdown, complete with images and any tables.

This clean, structured format is what LLMs work really well with, and we can import it via LightRAG's web interface.
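For more than one file, the docling command shown earlier can be wrapped in a small script. The sketch below is a rough illustration that reuses the same flags; the function names are hypothetical, and it assumes the `docling` command is on the PATH and that its `--output` option is used to choose the destination folder.

```python
import subprocess
from pathlib import Path
from typing import List


def docling_command(pdf: Path, out_dir: Path) -> List[str]:
    """Build the docling invocation for one PDF as an argument list."""
    return [
        "docling",
        "--pipeline", "vlm",
        "--device", "cuda",
        "--num-threads", "32",
        "--ocr-engine", "tesseract",
        "--ocr-lang", "msa,eng",
        "--image-export-mode", "referenced",
        "--output", str(out_dir),
        str(pdf),
    ]


def convert_folder(pdf_dir: Path, out_dir: Path) -> None:
    """Convert every PDF in pdf_dir, stopping at the first failure."""
    out_dir.mkdir(parents=True, exist_ok=True)
    for pdf in sorted(pdf_dir.glob("*.pdf")):
        # An argument list (no shell) means spaces in file names need no escaping.
        subprocess.run(docling_command(pdf, out_dir), check=True)
```

Passing the arguments as a list avoids the backslash escaping that file names with spaces need on the command line. The thread count and device are simply copied from the example and should be adjusted to the machine at hand.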

LightRAG

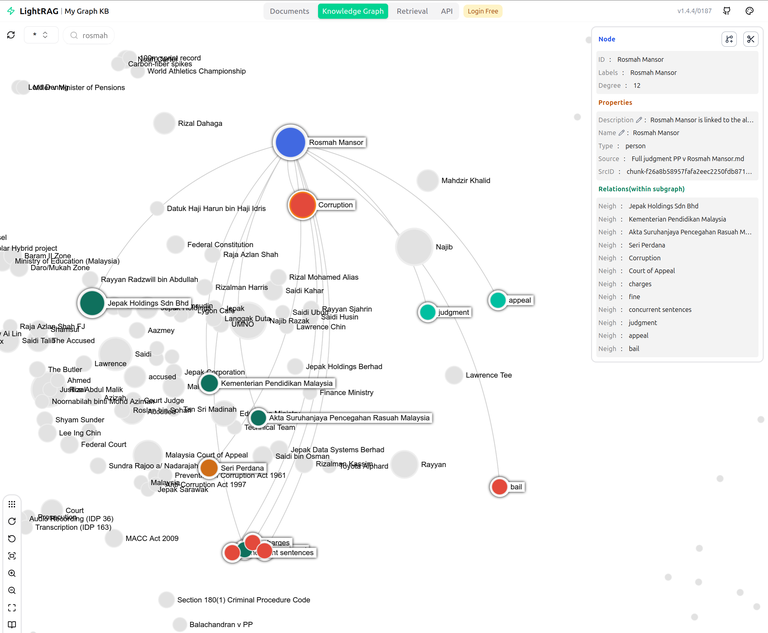

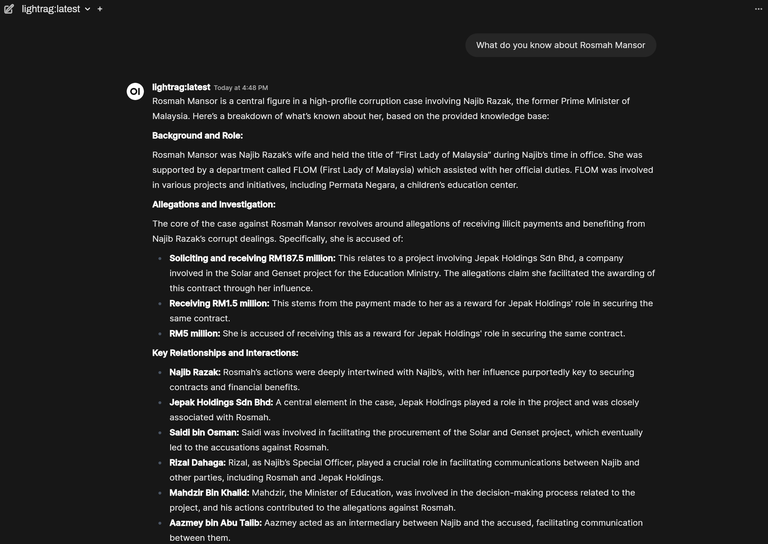

When you import a document into LightRAG, it processes it with the LLM you choose, converts it into the embeddings used for RAG, extracts entities, merges them when needed and provides a nice knowledge graph. Even with a smaller model and limited context length, the preliminary results are impressive. As mentioned earlier, LightRAG integrates with Open WebUI through its support for the Ollama API. You can therefore easily add it as an external model and query it just like any other LLM.
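Because LightRAG speaks the Ollama API, it can also be queried from a script in the same way as an Ollama chat model. The sketch below is hedged accordingly: the server address and the model name are assumptions about a default local LightRAG server setup and should be checked against your own configuration, and the function names are purely illustrative.

```python
import json
import urllib.request

# Assumptions: check both values against your own LightRAG server configuration.
LIGHTRAG_URL = "http://localhost:9621"
LIGHTRAG_MODEL = "lightrag:latest"


def build_chat_request(question, base_url=LIGHTRAG_URL, model=LIGHTRAG_MODEL):
    """Build an Ollama-style /api/chat request carrying a single user question."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
        "stream": False,  # one JSON reply instead of a token stream
    }
    return urllib.request.Request(
        f"{base_url.rstrip('/')}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_chat_answer(body):
    """Pull the assistant's text out of an Ollama-style chat response."""
    return json.loads(body)["message"]["content"]


def ask(question, **kwargs):
    """Send the question to the server and return the answer text."""
    with urllib.request.urlopen(build_chat_request(question, **kwargs)) as resp:
        return parse_chat_answer(resp.read())
```

This mirrors what Open WebUI does when LightRAG is added as an external model, so the same questions can be asked from the chat interface or from a script.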

This is all preliminary exploration, but I can already see how it would help investigative journalists quickly search, verify and organise key information with the help of AI tools and LLMs, augmenting their existing methods and tools. No need to type out details in photos. It'll translate them too if they're in a different language.

If I'm looking for something specific, I don't need to go through dozens of court documents. I can just ask for the information I need, including additional insights. With RAG, because sources are provided, I can quickly verify the results. Since the results are returned in structured Markdown format, I can also copy and paste them from Open WebUI into my notes or spreadsheets.

I'll be presenting some of this work at the upcoming Third International Conference on Anti-Corruption Innovations in Southeast Asia in Bangkok next month, and will share more details of real examples of how this supplements past work on investigating corruption cases around Politically Exposed Persons and Beneficial Ownership.

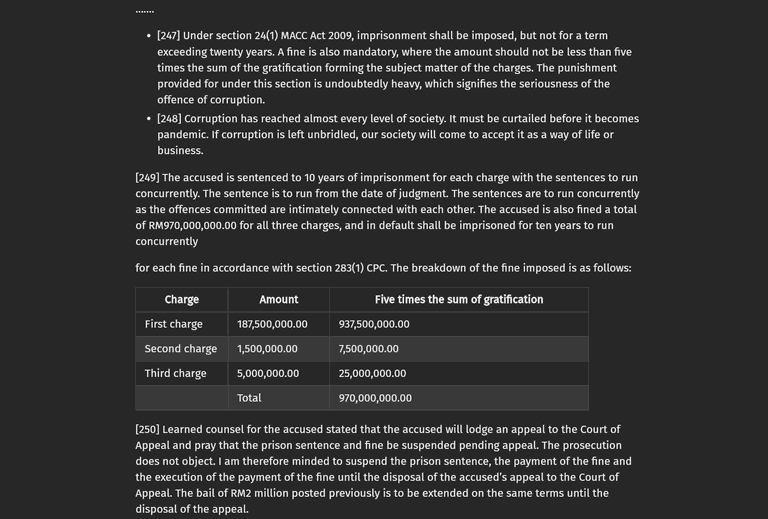
